Mathematics for Machine Learning

Machine learning may seem like magic, but behind that magic is mathematics. To truly understand how algorithms work, you need to be familiar with key mathematical concepts. This chapter will cover the essentials of Linear Algebra, Probability and Statistics, and Calculus. These form the bedrock of machine learning, helping you build better models, optimize algorithms, and interpret results effectively.

2.1 Linear Algebra Essentials

Linear algebra deals with vectors, matrices, and operations involving them. Machine learning algorithms often rely on linear algebra to represent and manipulate data efficiently.

Key Concepts in Linear Algebra

- Vectors

A vector is an ordered array of numbers, written as a single row (row vector) or a single column (column vector). A vector can represent a point in space, such as the feature values of one data point in a dataset. Example:

A 3-dimensional vector:

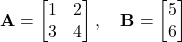

- Matrices

A matrix is a two-dimensional array of numbers. In machine learning, matrices are used to represent datasets, where each row is a data point and each column is a feature. Example:

A 3×2 matrix:

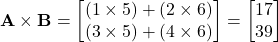
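As a rough illustration of the row-and-column convention described above, the sketch below stores a small dataset as a list of rows in plain Python. The values and the names `X`, `first_point`, and `first_feature` are made up for this example.

```python
# Hypothetical dataset: 3 data points (rows), 2 features (columns).
X = [[5.1, 3.5],
     [4.9, 3.0],
     [6.2, 2.9]]

n_rows, n_cols = len(X), len(X[0])

first_point = X[0]                     # one data point: a row vector
first_feature = [row[0] for row in X]  # one feature across all points: a column

print((n_rows, n_cols))   # (3, 2)
print(first_point)        # [5.1, 3.5]
print(first_feature)      # [5.1, 4.9, 6.2]
```

In practice a numerical library would usually hold this data, but the indexing idea is the same.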

- Matrix Operations

- Addition: Matrices of the same size can be added element-wise.

- Scalar Multiplication: Multiply every element in a matrix by a constant.

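- Matrix Multiplication: Combine an m×n matrix with an n×p matrix to produce an m×p matrix. Each entry of the result is the dot product of a row of the first matrix and a column of the second.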

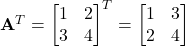
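The three operations above can be sketched in plain Python, with matrices stored as lists of rows. The helper names `mat_add`, `scalar_mul`, and `mat_mul` are illustrative, not part of any library.

```python
# Matrices as lists of rows (plain Python, no external libraries).
A = [[1, 2],
     [3, 4]]
B = [[5, 6],
     [7, 8]]

def mat_add(X, Y):
    """Element-wise sum of two matrices of the same shape."""
    return [[x + y for x, y in zip(row_x, row_y)] for row_x, row_y in zip(X, Y)]

def scalar_mul(c, X):
    """Multiply every element of X by the constant c."""
    return [[c * x for x in row] for row in X]

def mat_mul(X, Y):
    """Matrix product: entry (i, j) is the dot product of row i of X and column j of Y."""
    if len(X[0]) != len(Y):
        raise ValueError("columns of X must equal rows of Y")
    cols_y = list(zip(*Y))  # columns of Y
    return [[sum(x * y for x, y in zip(row, col)) for col in cols_y] for row in X]

print(mat_add(A, B))     # [[6, 8], [10, 12]]
print(scalar_mul(2, A))  # [[2, 4], [6, 8]]
print(mat_mul(A, B))     # [[19, 22], [43, 50]]
```

Note that matrix multiplication is not element-wise, and in general `mat_mul(A, B)` differs from `mat_mul(B, A)`.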

- Transpose of a Matrix

Transposing a matrix flips it along its main diagonal, so rows become columns and vice versa. Example:

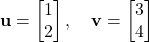
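A minimal sketch of the transpose in plain Python, assuming the matrix is stored as a list of rows. The function name `transpose` is just for illustration.

```python
M = [[1, 2],
     [3, 4],
     [5, 6]]  # a 3x2 matrix

def transpose(X):
    """Return the transpose: column j of X becomes row j of the result."""
    return [list(col) for col in zip(*X)]

print(transpose(M))  # [[1, 3, 5], [2, 4, 6]]
```

The 3×2 input becomes a 2×3 output, and transposing twice returns the original matrix.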

- Dot Product

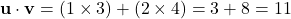

The dot product of two vectors measures how similar they are. Example:
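The sketch below computes a dot product in plain Python. Because the raw dot product also grows with vector length, it additionally shows cosine similarity, which divides by the two lengths. Cosine similarity is not mentioned in the text above and is included here only as a common way to turn the dot product into a similarity score.

```python
import math

def dot(u, v):
    """Sum of element-wise products of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def cosine_similarity(u, v):
    """Dot product divided by both vector lengths; ranges from -1 to 1."""
    return dot(u, v) / (math.sqrt(dot(u, u)) * math.sqrt(dot(v, v)))

u = [1, 2, 3]
v = [4, 5, 6]

print(dot(u, v))            # 32
print(dot([1, 0], [0, 1]))  # 0 (perpendicular vectors)
print(cosine_similarity(u, v))
```

A dot product of 0 means the vectors are perpendicular, while a cosine similarity near 1 means they point in almost the same direction.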

Why Linear Algebra Matters in ML

- Data Representation: Datasets are often stored as matrices, and individual features are represented as vectors.

- Transformations: Algorithms like PCA (Principal Component Analysis) use linear algebra to reduce dimensions.

- Neural Networks: Operations in neural networks rely heavily on matrix multiplication.

2.2 Probability and Statistics Overview

Probability and statistics give machine learning a way to quantify uncertainty and variation in data. Understanding these concepts helps you build models whose predictions you can evaluate and trust.

Key Concepts in Probability

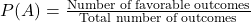

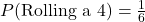

- Probability Basics

Probability measures the likelihood of an event occurring. Formula:

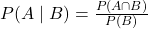

- Conditional Probability

The probability of event A happening given that event B has already happened. Formula:

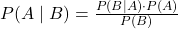
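To make the two definitions above concrete, here is a small sketch using one roll of a fair die. It assumes equally likely outcomes and the standard definition P(A | B) = P(A and B) / P(B). The events `A` and `B` are chosen only for illustration.

```python
from fractions import Fraction

outcomes = {1, 2, 3, 4, 5, 6}  # one roll of a fair die

def prob(event):
    """Probability as favourable outcomes divided by total outcomes."""
    return Fraction(len(event & outcomes), len(outcomes))

A = {x for x in outcomes if x > 3}       # roll is greater than 3: {4, 5, 6}
B = {x for x in outcomes if x % 2 == 0}  # roll is even: {2, 4, 6}

p_b = prob(B)                   # 1/2
p_a_and_b = prob(A & B)         # {4, 6} -> 1/3
p_a_given_b = p_a_and_b / p_b   # 2/3

print(p_b)          # 1/2
print(p_a_given_b)  # 2/3
```

Knowing the roll is even raises the probability of "greater than 3" from 1/2 to 2/3.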

- Bayes’ Theorem

Bayes' theorem updates the probability of an event based on new evidence. Formula:

$$P(A \mid B) = \frac{P(B \mid A)\, P(A)}{P(B)}$$
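A worked example may help here. The sketch below applies Bayes' theorem, P(A | B) = P(B | A) · P(A) / P(B), to a made-up spam filter. All three input probabilities are hypothetical numbers chosen for easy arithmetic.

```python
from fractions import Fraction

# Hypothetical inputs (assumptions for illustration only).
p_spam = Fraction(1, 5)              # P(spam): prior
p_word_given_spam = Fraction(1, 2)   # P(word | spam)
p_word_given_ham = Fraction(1, 20)   # P(word | not spam)

# Total probability of seeing the word in any email.
p_word = p_word_given_spam * p_spam + p_word_given_ham * (1 - p_spam)

# Bayes' theorem: posterior probability of spam given the word.
p_spam_given_word = p_word_given_spam * p_spam / p_word

print(p_word)                             # 7/50
print(p_spam_given_word)                  # 5/7
print(round(float(p_spam_given_word), 3)) # 0.714
```

Seeing the word moves the probability of spam from the prior of 0.2 to about 0.71.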

- Probability Distribution

- Normal Distribution: Bell-shaped curve; many natural phenomena follow this distribution.

- Bernoulli Distribution: Binary outcomes (e.g., coin flips).

- Poisson Distribution: Counts of events in a fixed interval of time (e.g., number of calls to a customer service line per hour).
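The three distributions listed above can be evaluated with Python's standard library. This is a minimal sketch: `NormalDist` comes from the `statistics` module, while `bernoulli_pmf` and `poisson_pmf` are small helpers written for this example from the textbook formulas.

```python
import math
from statistics import NormalDist

def bernoulli_pmf(k, p):
    """P(X = k) for a single binary trial with success probability p."""
    if k not in (0, 1):
        raise ValueError("k must be 0 or 1")
    return p if k == 1 else 1 - p

def poisson_pmf(k, lam):
    """P(X = k) events when the average count per interval is lam."""
    return math.exp(-lam) * lam**k / math.factorial(k)

standard_normal = NormalDist(mu=0, sigma=1)

# Height of the bell curve at its centre.
print(round(standard_normal.pdf(0), 4))  # 0.3989
# Share of values within one standard deviation of the mean.
print(round(standard_normal.cdf(1) - standard_normal.cdf(-1), 4))  # 0.6827
# A fair coin landing heads.
print(bernoulli_pmf(1, 0.5))  # 0.5
# Exactly 2 calls in an interval that averages 3 calls.
print(round(poisson_pmf(2, 3), 4))  # 0.224
```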

Key Concepts in Statistics

- Descriptive Statistics

- Mean: Average value.

- Median: Middle value in a sorted list.

- Mode: Most frequent value.

- Standard Deviation: Measure of how widely values spread around the mean.

- Inferential Statistics

- Hypothesis Testing: Testing assumptions about data (e.g., A/B testing).

- Confidence Intervals: Range where a population parameter likely falls.
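The descriptive measures above, plus a rough confidence interval, can be computed with Python's `statistics` module. The sample is hypothetical, and the interval uses a normal approximation as a simplifying assumption; for a sample this small, a t-based interval would be somewhat wider.

```python
import math
import statistics
from statistics import NormalDist

# Hypothetical sample, chosen only for illustration.
data = [2, 4, 4, 4, 5, 5, 7, 9]

mean = statistics.mean(data)      # average value
median = statistics.median(data)  # middle of the sorted values
mode = statistics.mode(data)      # most frequent value
spread = statistics.pstdev(data)  # population standard deviation

print(mean, median, mode, spread)  # 5 4.5 4 2.0

# Approximate 95% confidence interval for the population mean.
std_err = statistics.stdev(data) / math.sqrt(len(data))  # standard error
z = NormalDist().inv_cdf(0.975)                          # about 1.96
lower, upper = mean - z * std_err, mean + z * std_err

print(f"95% CI for the mean: ({lower:.2f}, {upper:.2f})")
```

The interval is centred on the sample mean and narrows as the sample size grows, because the standard error shrinks with the square root of the number of observations.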

Why Probability and Statistics Matter in ML

- Model Evaluation: Metrics like accuracy, precision, and recall are proportions that estimate probabilities, such as the chance that a positive prediction is correct.

- Uncertainty Management: Probabilistic models like Naive Bayes use these concepts.

- Data Insights: Statistical analysis helps in understanding patterns in data.

2.3 Calculus Basics for ML

Calculus describes how changes in a function's inputs affect its output, which is what makes it possible to optimize machine learning models. In ML, calculus is primarily used for gradient-based optimization.

Key Concepts in Calculus

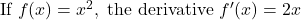

- Derivatives

A derivative measures the rate of change of a function with respect to its input. Example: for $f(x) = x^2$, the derivative is $f'(x) = 2x$.
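A derivative can also be approximated numerically, which is a handy way to check a formula. The sketch below uses a central difference on f(x) = x², whose exact derivative is 2x. The function name `numerical_derivative` and the step size `h` are choices made for this example.

```python
def f(x):
    return x ** 2

def numerical_derivative(func, x, h=1e-5):
    """Central-difference estimate of the slope of func at x."""
    return (func(x + h) - func(x - h)) / (2 * h)

slope = numerical_derivative(f, 3.0)
print(round(slope, 6))  # 6.0, matching the exact derivative 2 * 3
```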

- Gradient

The gradient is the vector of partial derivatives; it points in the direction of steepest ascent. Example:

$$\text{For } f(x, y) = x^2 + y^2: \quad \nabla f = \left[ \frac{\partial f}{\partial x}, \frac{\partial f}{\partial y} \right] = [2x, 2y]$$

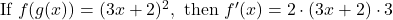
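As a quick check of the gradient example f(x, y) = x² + y², whose gradient is [2x, 2y], the sketch below estimates each partial derivative numerically by nudging one coordinate at a time. `numerical_gradient` is an illustrative helper, not a library function.

```python
def f(x, y):
    return x ** 2 + y ** 2

def numerical_gradient(func, point, h=1e-5):
    """Estimate each partial derivative with a central difference."""
    grad = []
    for i in range(len(point)):
        forward = list(point)
        backward = list(point)
        forward[i] += h    # nudge only coordinate i up
        backward[i] -= h   # and down
        grad.append((func(*forward) - func(*backward)) / (2 * h))
    return grad

grad = numerical_gradient(f, [1.0, 2.0])
print([round(g, 6) for g in grad])  # [2.0, 4.0], matching [2x, 2y] at (1, 2)
```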

- Chain Rule

The chain rule gives the derivative of a function that is composed of other functions: if $y = f(g(x))$, then $\frac{dy}{dx} = f'(g(x)) \cdot g'(x)$. Example:

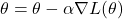
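The chain rule can be verified on a small composition. The sketch below assumes the illustrative functions g(x) = 3x + 1 and f(u) = u², so that y = (3x + 1)², and compares the chain-rule result with a numerical estimate.

```python
def g(x):
    return 3 * x + 1   # inner function

def f(u):
    return u ** 2      # outer function

def dg(x):
    return 3           # derivative of the inner function

def df(u):
    return 2 * u       # derivative of the outer function

def composed(x):
    return f(g(x))     # y = (3x + 1)^2

def chain_rule_derivative(x):
    """dy/dx = f'(g(x)) * g'(x)."""
    return df(g(x)) * dg(x)

x = 2.0
h = 1e-5
analytic = chain_rule_derivative(x)
numeric = (composed(x + h) - composed(x - h)) / (2 * h)

print(analytic)           # 42.0
print(round(numeric, 4))  # 42.0
```

Backpropagation in neural networks applies this same rule repeatedly, one layer at a time.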

- Optimization with Gradients

Gradient descent is an optimization technique for minimizing the loss function of an ML model. The idea is to update the model parameters repeatedly by taking small steps in the direction of the negative gradient. Update rule:

$$\theta \leftarrow \theta - \alpha \nabla L(\theta)$$

- $\theta$: Model parameter

- $\alpha$: Learning rate

- $\nabla L(\theta)$: Gradient of the loss function
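A minimal sketch of gradient descent on a one-parameter problem: each step subtracts the learning rate times the gradient from the current parameter. The loss L(θ) = (θ − 3)², the starting point, the learning rate, and the step count are all assumptions chosen so the answer is easy to verify, since the minimum is at θ = 3.

```python
def loss(theta):
    return (theta - 3.0) ** 2

def grad_loss(theta):
    """Derivative of the loss with respect to theta."""
    return 2.0 * (theta - 3.0)

def gradient_descent(grad, theta, alpha=0.1, steps=100):
    """Repeatedly step against the gradient, scaled by the learning rate alpha."""
    for _ in range(steps):
        theta = theta - alpha * grad(theta)
    return theta

theta_min = gradient_descent(grad_loss, theta=0.0)
print(round(theta_min, 4))        # 3.0
print(round(loss(theta_min), 4))  # 0.0
```

With this loss, a learning rate above 1.0 makes the updates overshoot and diverge, while a very small rate converges slowly, which is why the learning rate usually needs tuning.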

Why Calculus Matters in ML

- Training Models: Neural networks use calculus for backpropagation.

- Optimization: Minimizing loss functions to improve model accuracy.

- Learning Rates: Fine-tuning learning rates for better convergence.

Conclusion

In this chapter, we’ve covered the essential mathematical tools needed for machine learning: Linear Algebra, Probability and Statistics, and Calculus. Mastering these concepts will give you a deeper understanding of how algorithms work under the hood and help you develop more robust models.