Introduction

In the ever-evolving world of machine learning, understanding foundational concepts like Bayes’ Theorem is crucial. This theorem, named after the statistician Thomas Bayes, provides a framework for updating probabilities based on new evidence. Its significance in machine learning cannot be overstated, as it powers various algorithms and applications, including classification tasks, spam detection, and anomaly detection.

This article provides an in-depth exploration of Bayes’ Theorem, covering its principles, practical implications, and how it underpins algorithms like Naive Bayes. By the end of this guide, you’ll have a detailed understanding of how Bayes’ Theorem works, why it is so valuable in machine learning, and how to apply it to real-world problems.

What is Bayes’ Theorem in Machine Learning?

Bayes’ Theorem is a mathematical formula that describes the probability of an event based on prior knowledge of conditions that might be related to the event. In machine learning, it helps us predict outcomes by updating our beliefs or probabilities as new data becomes available. This concept of iterative updating makes it particularly effective for models that deal with uncertainty or incomplete data.

For instance, consider a scenario where a machine learning model predicts whether an email is spam. Before analyzing the content, the model might rely on historical data about spam emails. After processing specific keywords in the email, the model updates its prediction using Bayes’ Theorem, providing a more accurate classification.

Machine learning heavily relies on probability theory, and Bayes’ Theorem bridges the gap between theoretical probabilities and practical applications. By combining prior knowledge (historical data) with current evidence, Bayes’ Theorem enables models to make well-informed predictions.

A Simple Statement of Bayes’ Theorem

At its core, Bayes’ Theorem can be expressed in the following formula:

$$P(A \mid B) = \frac{P(B \mid A)\,P(A)}{P(B)}$$

Here’s what each term represents:

- $P(A \mid B)$: The probability of event $A$ occurring given that event $B$ has occurred. This is known as the posterior probability.
- $P(B \mid A)$: The probability of event $B$ occurring given that event $A$ has occurred. This is the likelihood.
- $P(A)$: The probability of event $A$ occurring on its own, also called the prior probability.
- $P(B)$: The total probability of event $B$, accounting for all possible outcomes, often called the evidence. For a yes/no hypothesis it expands as $P(B) = P(B \mid A)\,P(A) + P(B \mid \neg A)\,P(\neg A)$.
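The formula follows in two steps from the definition of conditional probability. The short derivation below assumes $P(A) > 0$ and $P(B) > 0$ so that both conditional probabilities are defined:

$$P(A \mid B) = \frac{P(A \cap B)}{P(B)}, \qquad P(B \mid A) = \frac{P(A \cap B)}{P(A)}$$

$$\Rightarrow\; P(A \cap B) = P(B \mid A)\,P(A) \;\Rightarrow\; P(A \mid B) = \frac{P(B \mid A)\,P(A)}{P(B)}$$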

Explanation

To put it simply, Bayes’ Theorem calculates the probability of a hypothesis (event $A$) being true after taking into account new evidence (event $B$).

This theorem is particularly powerful because it allows for the incorporation of new evidence without discarding the existing knowledge.

Relevance in Machine Learning

This updating process is central to many machine learning algorithms, where the model learns incrementally by processing new data.
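A minimal sketch of this incremental updating, assuming a yes/no hypothesis and a made-up coin-flipping scenario: the `update` function, the 0.8 and 0.5 heads probabilities, and the flip sequence are all hypothetical, chosen only for illustration. After each observation, the posterior is fed back in as the prior for the next one.

```python
def update(prior: float, p_e_given_h: float, p_e_given_not_h: float) -> float:
    """Return P(H | E) for a yes/no hypothesis H using Bayes' Theorem."""
    # Total probability of the evidence: P(E) = P(E|H)P(H) + P(E|not H)P(not H)
    evidence = p_e_given_h * prior + p_e_given_not_h * (1.0 - prior)
    return p_e_given_h * prior / evidence


# Hypothetical setup: H = "the coin is biased, P(heads) = 0.8";
# the alternative is "the coin is fair, P(heads) = 0.5".
# Flips are assumed independent given which coin it is.
P_HEADS_BIASED = 0.8
P_HEADS_FAIR = 0.5

belief = 0.5  # starting prior P(H)
history = []
for flip in ["H", "H", "T", "H", "H"]:
    if flip == "H":
        belief = update(belief, P_HEADS_BIASED, P_HEADS_FAIR)
    else:
        belief = update(belief, 1 - P_HEADS_BIASED, 1 - P_HEADS_FAIR)
    history.append(round(belief, 3))  # this posterior is the next prior

print(history)  # → [0.615, 0.719, 0.506, 0.621, 0.724]
```

Each "heads" nudges the belief toward the biased coin and the single "tails" pulls it back, without ever restarting from scratch: the earlier evidence is carried forward inside the prior.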

The Principle of Bayes’ Theorem

The principle of Bayes’ Theorem lies in its ability to update probabilities based on new information. This makes it invaluable for decision-making in uncertain environments, a frequent scenario in machine learning.

Let’s break this down:

- Prior Probability ($P(A)$): This represents the initial belief about the likelihood of an event before considering new evidence. Example: In a medical diagnosis, the prior probability might be the known prevalence of a disease in a population.
- Likelihood ($P(B \mid A)$): This quantifies how probable the new evidence is if the hypothesis is true, i.e., how well the evidence supports the hypothesis. Example: Using the same example, this could be the probability of observing certain symptoms if the patient has the disease.
- Posterior Probability ($P(A \mid B)$): This is the updated probability after combining the prior probability and the likelihood. Example: It tells us how likely the hypothesis is after considering the new evidence, such as the probability that the patient has the disease given the observed symptoms.
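To make the medical example concrete, here is a small sketch with hypothetical numbers: a 1% prevalence, a 90% chance of the symptom when the disease is present, and a 5% chance of the symptom without it. These figures are illustrative, not real clinical data, and the function name `posterior_probability` is likewise made up for this example.

```python
def posterior_probability(prior: float, likelihood: float, likelihood_if_absent: float) -> float:
    """P(disease | symptom) from P(disease), P(symptom | disease), P(symptom | no disease)."""
    # P(symptom): total probability over "disease" and "no disease"
    evidence = likelihood * prior + likelihood_if_absent * (1.0 - prior)
    return likelihood * prior / evidence


# Hypothetical numbers for illustration only
prevalence = 0.01               # prior P(disease)
p_symptom_given_disease = 0.90  # likelihood P(symptom | disease)
p_symptom_given_healthy = 0.05  # P(symptom | no disease)

post = posterior_probability(prevalence, p_symptom_given_disease, p_symptom_given_healthy)
print(f"P(disease | symptom) = {post:.3f}")  # → P(disease | symptom) = 0.154
```

Even with a likelihood of 0.9, the posterior stays near 15% because the prior is small. This is why the prior cannot be ignored: the same symptom means much more in a population where the disease is common.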

Explanation

This iterative process of updating beliefs allows machine learning models to improve over time as they are exposed to more data.

Application in Machine Learning

Bayes’ Theorem is particularly useful in classification problems, where the model predicts the category of an input based on observed features.
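A minimal sketch of Bayesian classification with a single observed feature and three classes. The class names, priors, and likelihoods are hypothetical values chosen for illustration; the point is that the model computes a posterior for every class and predicts the largest one.

```python
# Hypothetical priors P(class) and likelihoods P(feature | class)
priors = {"sports": 0.5, "politics": 0.3, "tech": 0.2}
likelihood_of_feature = {"sports": 0.1, "politics": 0.05, "tech": 0.4}


def classify(priors: dict, likelihoods: dict):
    # Unnormalized posteriors: P(feature | class) * P(class)
    scores = {c: likelihoods[c] * priors[c] for c in priors}
    evidence = sum(scores.values())  # P(feature), by total probability
    posteriors = {c: s / evidence for c, s in scores.items()}
    # Predict the class with the highest posterior
    best = max(posteriors, key=posteriors.get)
    return best, posteriors


best, posteriors = classify(priors, likelihood_of_feature)
print(best, {c: round(p, 3) for c, p in posteriors.items()})
# → tech {'sports': 0.345, 'politics': 0.103, 'tech': 0.552}
```

Although "sports" has the highest prior, the observed feature is far more likely under "tech", so the posterior shifts in its favor. Dividing by the evidence turns the scores into probabilities but does not change which class wins.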

What is the Meaning of Bayes?

The term “Bayes” comes from Thomas Bayes, an 18th-century statistician who introduced a method for calculating conditional probabilities. His work, later expanded by Pierre-Simon Laplace, laid the foundation for what we now call Bayesian inference.

The meaning of Bayes in a modern context is rooted in the concept of learning from data. Bayes’ Theorem formalizes how we update our understanding of the world based on evidence. It encapsulates the essence of probabilistic reasoning, which is fundamental to artificial intelligence and machine learning.

In essence, Bayes represents a systematic way to handle uncertainty. Whether it’s diagnosing a disease, predicting customer behavior, or filtering spam, Bayes’ Theorem provides a structured approach to decision-making under uncertainty.

Naive Bayes Classifier in Machine Learning

One of the most well-known applications of Bayes’ Theorem in machine learning is the Naive Bayes classifier. Despite its simplicity, Naive Bayes is a powerful algorithm for many classification problems.

How Naive Bayes Works

The Naive Bayes classifier applies Bayes’ Theorem with the simplifying assumption that all features are conditionally independent, given the class label. While this assumption is often unrealistic in practice, it simplifies the computations and makes the algorithm highly efficient.

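The probability of a class $C$ given a set of features $X = (x_1, x_2, \ldots, x_n)$ is calculated as:

$$P(C \mid X) = \frac{P(C)\,\prod_{i=1}^{n} P(x_i \mid C)}{P(X)}$$

Because $P(X)$ is the same for every class, the predicted class is simply the one that maximizes the numerator.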
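A minimal from-scratch sketch of this formula for text, assuming bag-of-words features where each $x_i$ is a word in the email. Two choices here are additions for the sketch rather than part of the formula: Laplace (add-one) smoothing, so a word unseen in one class does not zero out the whole product, and summing log-probabilities instead of multiplying raw probabilities, to avoid numerical underflow with many features. The function names and the four-email training set are hypothetical.

```python
import math
from collections import Counter, defaultdict


def train(docs):
    """docs: list of (list_of_words, label). Returns priors, per-class word counts, vocabulary."""
    class_counts = Counter(label for _, label in docs)
    word_counts = defaultdict(Counter)  # word_counts[label][word] = occurrences
    vocab = set()
    for words, label in docs:
        word_counts[label].update(words)
        vocab.update(words)
    priors = {c: n / len(docs) for c, n in class_counts.items()}
    return priors, word_counts, vocab


def predict(words, priors, word_counts, vocab, alpha=1.0):
    """Return the class maximizing log P(C) + sum_i log P(x_i | C)."""
    scores = {}
    for c, prior in priors.items():
        total = sum(word_counts[c].values())
        score = math.log(prior)
        for w in words:
            if w not in vocab:
                continue  # ignore words never seen during training
            # Laplace-smoothed estimate of P(word | class)
            p = (word_counts[c][w] + alpha) / (total + alpha * len(vocab))
            score += math.log(p)
        scores[c] = score
    # P(X) is identical for every class, so it is dropped from the comparison
    return max(scores, key=scores.get)


training = [
    ("limited offer win money now".split(), "spam"),
    ("exclusive offer click now".split(), "spam"),
    ("meeting notes for project review".split(), "ham"),
    ("lunch tomorrow to discuss the project".split(), "ham"),
]
model = train(training)
print(predict("special offer now".split(), *model))         # → spam
print(predict("project meeting tomorrow".split(), *model))  # → ham
```

Training is a single counting pass over the data, and prediction only looks up per-word counts, which is where the algorithm's efficiency comes from.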

Applications of Naive Bayes

- Spam Detection: Classifying emails as spam or not spam based on keywords.
- Sentiment Analysis: Determining whether a review is positive or negative.
- Medical Diagnosis: Predicting the presence of a disease based on symptoms.
- Text Classification: Categorizing documents into predefined classes.

Why Use Naive Bayes?

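Naive Bayes is particularly effective when the feature space is large: training amounts to counting how often each feature appears in each class, a single pass over the data, so the algorithm stays computationally efficient and easy to implement even with thousands of features.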

Practical Example of Bayes’ Theorem in Machine Learning

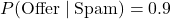

To understand the practical application of Bayes’ Theorem, let’s revisit the spam classification problem:

Problem

We want to classify whether an email containing the word “offer” is spam.

Given Data

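- $P(\text{Spam}) = 0.2$: 20% of emails are spam.
- $P(\text{Not Spam}) = 0.8$: 80% of emails are not spam.
- $P(\text{Offer} \mid \text{Spam}) = 0.9$: 90% of spam emails contain the word “offer.”
- $P(\text{Offer} \mid \text{Not Spam}) = 0.1$: 10% of non-spam emails contain the word “offer.”
- $P(\text{Offer}) = 0.26$: 26% of all emails contain the word “offer” ($0.9 \times 0.2 + 0.1 \times 0.8 = 0.26$).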

Solution

Using Bayes’ Theorem:

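$$P(\text{Spam} \mid \text{Offer}) = \frac{P(\text{Offer} \mid \text{Spam})\,P(\text{Spam})}{P(\text{Offer})}$$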

Substituting the values:

$$P(\text{Spam} \mid \text{Offer}) = \frac{0.9 \times 0.2}{0.26} = \frac{0.18}{0.26} \approx 0.692$$

Interpretation

This means there is approximately a 69.2% probability that an email containing the word “offer” is spam.
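The same arithmetic can be checked in a few lines of Python. This sketch uses only the numbers from the problem statement, and derives the 26% figure from the law of total probability instead of taking it as given.

```python
# Values from the problem statement
p_spam = 0.20
p_not_spam = 0.80
p_offer_given_spam = 0.90
p_offer_given_not_spam = 0.10

# Law of total probability: P(Offer) = P(Offer|Spam)P(Spam) + P(Offer|Not Spam)P(Not Spam)
p_offer = p_offer_given_spam * p_spam + p_offer_given_not_spam * p_not_spam

# Bayes' Theorem: P(Spam|Offer) = P(Offer|Spam)P(Spam) / P(Offer)
p_spam_given_offer = p_offer_given_spam * p_spam / p_offer

print(f"P(Offer) = {p_offer:.2f}")                    # → P(Offer) = 0.26
print(f"P(Spam | Offer) = {p_spam_given_offer:.3f}")  # → P(Spam | Offer) = 0.692
```

Seeing “offer” raises the spam probability from the 20% prior to about 69%, a useful signal on its own. A real filter would combine many such words, as Naive Bayes does.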

Applications of Bayes’ Theorem in Real-World Machine Learning

Bayes’ Theorem has broad applications in various machine learning domains, including:

a. Spam Filtering

Email systems use Bayes’ Theorem to classify messages as spam or not based on their content. Features like keywords, sender information, and formatting are analyzed to calculate probabilities.

b. Medical Diagnosis

Bayesian models assist in diagnosing diseases by considering symptoms and their likelihood given different conditions. This approach improves diagnostic accuracy and helps in personalized medicine.

c. Recommendation Systems

Bayesian inference is used to predict user preferences based on past interactions, enabling more accurate product recommendations.

d. Fraud Detection

Bayes’ Theorem helps detect fraudulent transactions by analyzing patterns and calculating the likelihood of anomalies.

e. Anomaly Detection

Bayesian methods identify unusual patterns in data, making them valuable for cybersecurity and network monitoring.

Conclusion

Bayes’ Theorem is a fundamental concept in probability and machine learning, offering a systematic approach to updating beliefs based on evidence. From powering algorithms like Naive Bayes to enabling applications in spam filtering, medical diagnosis, and fraud detection, Bayes’ Theorem is a cornerstone of modern data science.

By mastering this concept, machine learning practitioners can build more effective and efficient models that handle uncertainty with ease. Whether you’re a beginner or an expert, understanding Bayes’ Theorem will significantly enhance your ability to tackle complex, data-driven challenges.